- Brew install aquaterm terminal command pdf#

- Brew install aquaterm terminal command code#

- Brew install aquaterm terminal command plus#

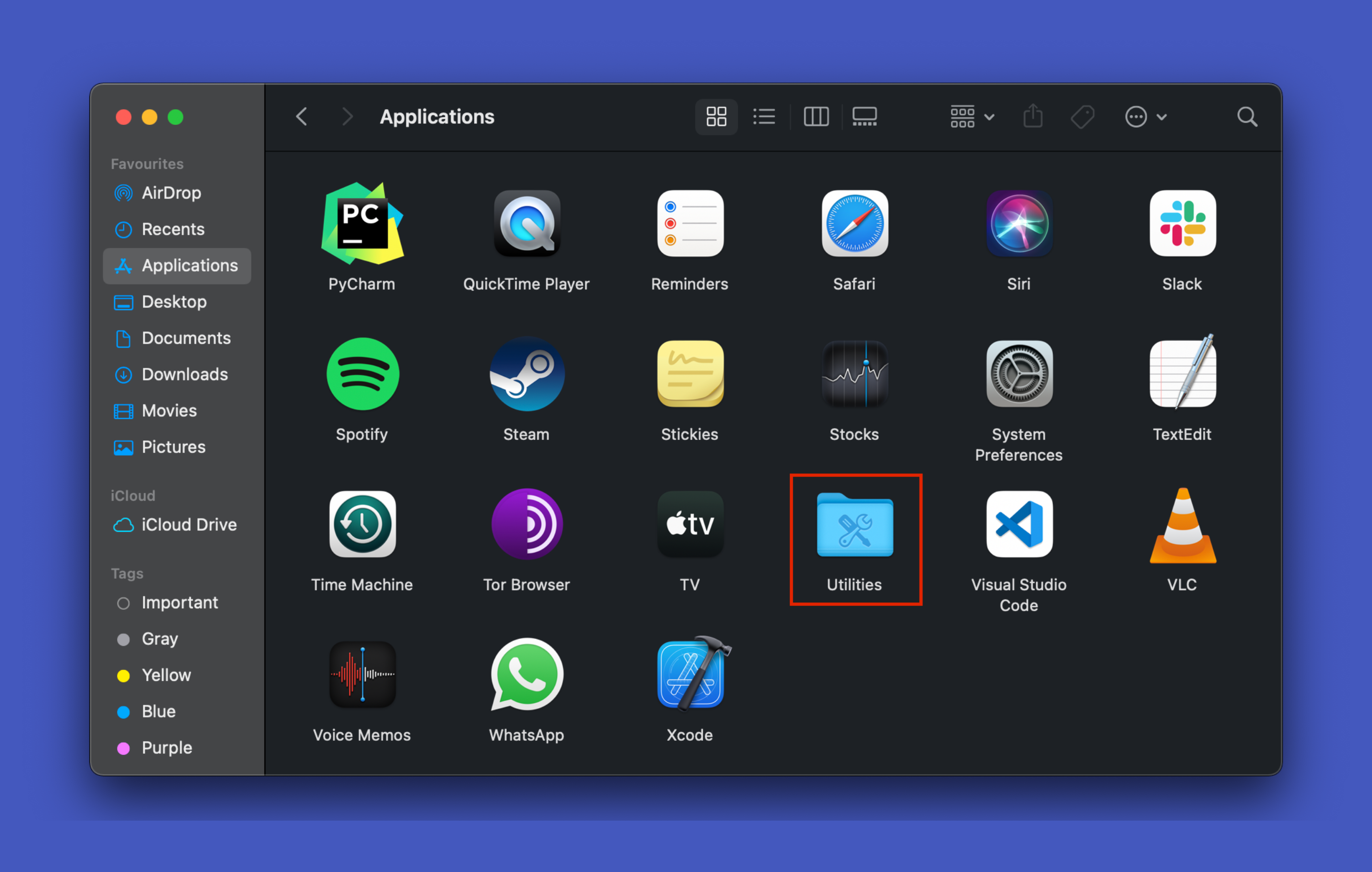

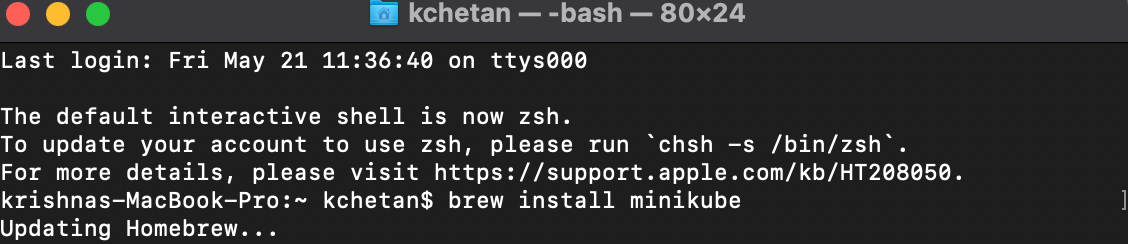

You can do a lot more than just convert files, too. Here at How-To Geek, for example, we put a 1-pixel black border on our images, and I do it with this command:

`convert -border 1x1 -bordercolor black testing.png result.png`

There's a lot of power to unpack here, so feel free to dive in: check out our original guide for tons of useful info.

You'll need to reset your dock for the new icon to show up.
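
Going back to the border command quoted above: as a minimal sketch, it can be wrapped in a small shell function so the same options are easy to reuse on other files. The function name `add_border` and the `DRY_RUN` switch are hypothetical names introduced for this example and are not part of `convert`. The options are copied unchanged from the command in the text, and the sketch assumes ImageMagick's `convert` is installed and on your PATH.

```shell
#!/bin/sh
# Sketch only: add_border and DRY_RUN are hypothetical names for this example.
# The convert options are copied from the command quoted in the text.

add_border() {
    input="$1"
    output="$2"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Dry run: print the command instead of running it.
        echo "convert -border 1x1 -bordercolor black $input $output"
    else
        # Real run: assumes ImageMagick's convert is on the PATH.
        convert -border 1x1 -bordercolor black "$input" "$output"
    fi
}

# Preview the command without touching any files.
DRY_RUN=1
add_border testing.png result.png
```

Setting `DRY_RUN=0` (or leaving it unset) makes the function run `convert` for real, so it is worth previewing the printed command first.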

RSNNS: This is a wrapper around the C code of the SNNS system (Stuttgart Neural Network Simulator). Training took 3 hours, I'm not sure what it built, and the results weren't even that good (though I don't have a point of comparison yet). Does it hang because I'm using R 3.03 rather than 2.9?

You can read more details on the ansiweather GitHub page, if you're interested.

Neuralnet: This package just kept hanging on me on the Watson dataset, regardless of the settings I chose. Maybe it was because I didn't hide the dead constant columns. The PDF has been updated recently; the code hasn't.

Nnet: A pretty fast and good implementation of single-hidden-layer (perceptron) neural networks.

This may be completely obvious to anyone who actually knows neural networks, given your probable experience with statistical software, but it needs to be said anyway. Right now, R is probably not the way to go if you want to do neural networks, or at least prototype them. If you want to do single-hidden-layer networks, R has good implementations and guides to help you get there. But frankly I think you're still stuck in the 80s. Multi-layer neural networks in R seem to be implemented in RSNNS, but there are no good guides yet. Plus it will run into memory limitations at some point.

The kernlab package and methods seem super complicated, so instead I've been using the guides from Dr. ..., which use the package e1071 (why the heck is it called that?). We made radial basis function and sigmoid SVMs and compared the results on the churn data set, training and test. Sigmoid is the SVM of choice? Wait, I think that is for neural networks. We then couldn't figure out straight-up cross-validation, but we did figure out tuning, which incorporates cross-validation: it searches for the best parameters! Unfortunately this gave marginally worse results. But it's okay, that was just one test case! Perhaps next I'll do the same on a giant public data set and record how long it takes for the short vs. long data set. I should also look for more SVM implementations, but this one seems fine.
